Apple Exposes 5 Shocking AI Reasoning Limitations That Could Change the Industry

Apple Just Challenged the Core of AI: “They’re Not Thinking — Just Guessing”

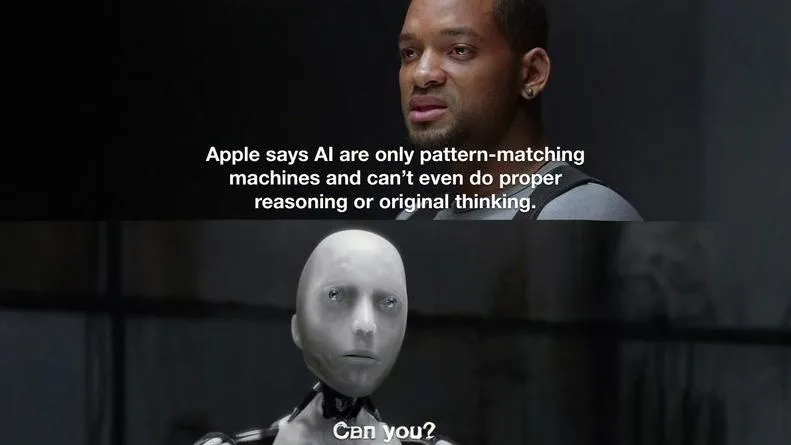

In a move that shook the AI community, Apple published a research paper called The Illusion of Thinking, which takes a bold stance on the AI reasoning limitations of today’s top models like Anthropic’s Claude, DeepSeek-R1, and OpenAI’s o3-mini.

While many believe that modern AI tools are capable of advanced reasoning, Apple’s research says otherwise: they’re just good at spotting patterns — and fail miserably when real thinking is required.

If you’re using AI in your work, product, or daily decision-making, this research is a must-read. Here’s a full breakdown — plus why this matters to every AI enthusiast, builder, and investor out there.

What Apple Tested and Why It Matters

The Core Focus: AI Reasoning Limitations in Fresh Logic Puzzles

Apple researchers built controllable puzzle environments (Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World) whose difficulty can be dialed up one step at a time. The idea was simple: test if the AI could apply step-by-step reasoning to solve a problem, rather than just rely on memorized training data.

But the results revealed deep AI reasoning limitations. Even with clear, structured prompting (like “think step-by-step”), models often made the same kinds of mistakes humans stop making in middle school logic classes.
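
To make this kind of test concrete, here is a minimal sketch of how a puzzle answer can be checked mechanically, using Tower of Hanoi (one of the puzzles in the paper) as the example. The function names `verify_hanoi` and `solve_hanoi` and the move format are assumptions made for illustration, not Apple's actual evaluation code.

```python
from typing import List, Tuple

# Assumed move format: (source peg, target peg), pegs numbered 0..2.
Move = Tuple[int, int]


def verify_hanoi(num_disks: int, moves: List[Move]) -> bool:
    """Return True if `moves` legally transfers all disks from peg 0 to peg 2."""
    # Each peg is a stack; the largest disk (num_disks) starts at the bottom.
    pegs = [list(range(num_disks, 0, -1)), [], []]
    for src, dst in moves:
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or not pegs[src]:
            return False  # unknown peg, or moving from an empty peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk may not sit on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(num_disks, 0, -1))


def solve_hanoi(n: int, src: int = 0, dst: int = 2, aux: int = 1) -> List[Move]:
    """Reference recursive solution, used here only to exercise the verifier."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, aux, dst)
        + [(src, dst)]
        + solve_hanoi(n - 1, aux, dst, src)
    )


if __name__ == "__main__":
    print(verify_hanoi(3, solve_hanoi(3)))      # a correct 7-move solution
    print(verify_hanoi(2, [(0, 2), (0, 2)]))    # illegal: disk 2 placed on disk 1
```

A checker like this scores every move in a model's answer, not just the final line, and raising `num_disks` makes the same puzzle harder without changing its rules.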

The AI Models Tested — And How They Performed

Let’s break down how individual models handled these tests.

Claude 3.7 Sonnet (Anthropic)

- Known for strong long-form generation and accuracy

- Failed at multi-step logic that required conditional reasoning

- Often ignored parts of instructions or contradicted its own statements

DeepSeek-R1

- A top open-weight Chinese model often praised for academic tasks

- Performed slightly better in structured arithmetic

- Still struggled with fresh logic puzzles and abstract instruction-following

o3-mini (OpenAI)

- A small, proprietary reasoning model from OpenAI (not related to OLMo, the open-source model family from AI2)

- Lacked robust generalization when prompted with multi-part logical tasks

Across the board, AI reasoning limitations were exposed not in flashy benchmarks but in seemingly “simple” reasoning puzzles that forced models to think beyond surface-level cues.

Why Benchmarks Are Not the Best Measure of Intelligence

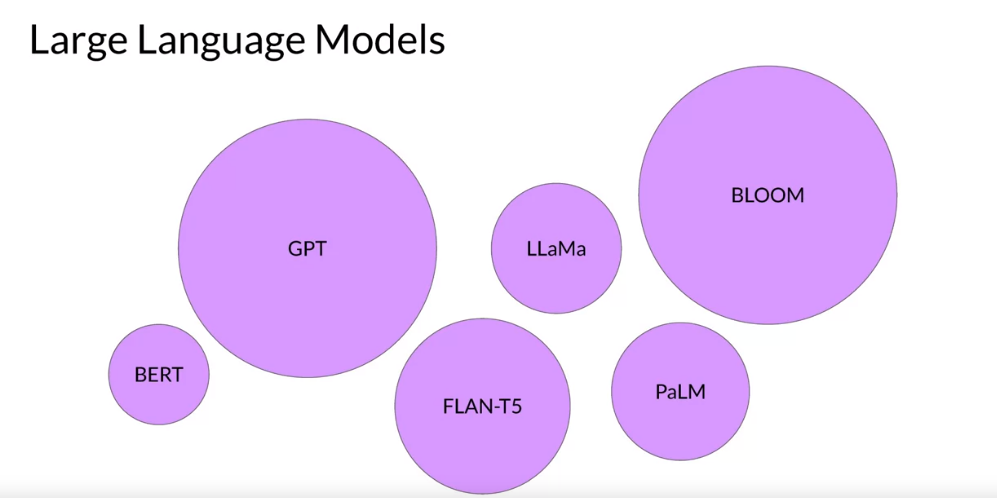

Many of the top models boast high scores on tests like:

- MMLU (Massive Multitask Language Understanding)

- GSM8K (Grade school math)

- ARC (AI2 Reasoning Challenge)

But these benchmarks often:

- Contain repeated patterns or public examples

- Allow models to exploit statistical correlations

- Encourage overfitting to test sets

Apple argues that these benchmarks don’t reflect real-world AI reasoning limitations, because they reward familiarity, not true cognition.
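
One rough way to see how familiarity can creep into a benchmark is to measure word-level n-gram overlap between a test item and text a model may have trained on. The sketch below is a simplified illustration under that assumption; `ngrams` and `overlap_ratio` are hypothetical helper names, and real contamination audits are considerably more involved.

```python
from typing import Set, Tuple


def ngrams(text: str, n: int = 5) -> Set[Tuple[str, ...]]:
    """Lowercased, whitespace-tokenized word n-grams of `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item: str, corpus_text: str, n: int = 5) -> float:
    """Fraction of the item's n-grams that also appear in the corpus text."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0  # item is shorter than n words, so nothing can be compared
    return len(item_grams & ngrams(corpus_text, n)) / len(item_grams)


if __name__ == "__main__":
    item = "if a train leaves the station at noon how far does it travel"
    seen = "forum post: if a train leaves the station at noon how far does it travel by 3pm"
    print(overlap_ratio(item, seen))
```

A ratio near 1.0 suggests the item (or a close copy) is already public, so a high score on it says little about reasoning.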

4 Key Insights from Apple’s Paper

1. Reasoning Is Not Pattern Recognition

What Apple’s results suggest is that pattern recognition isn’t enough. Real reasoning means building mental models, holding variables in working memory, and following instructions, something current LLMs still can’t do reliably.

2. Step-by-Step Prompts Can’t Fix Fundamental Gaps

Chain-of-thought (CoT) prompting helps with structured thinking — but it’s not a silver bullet. Apple showed that even with CoT, AI reasoning limitations remain, especially on novel or abstract problems.

3. Today’s LLMs Provide an Illusion of Intelligence

They sound smart. They mimic the tone of a human thinker. But once pushed out of their training comfort zone, these models fail.

4. The Industry Is Prioritizing Flash Over Depth

Apple indirectly critiques the industry’s obsession with leaderboard scores, suggesting it’s time to focus on models that can genuinely reason, not just regurgitate.

Why Apple’s Timing Is Strategic

Apple’s not known for public AI wars, but this paper landed just days before WWDC 2025, while the company is still rolling out Apple Intelligence, its personal AI system that runs largely on-device.

So the message is clear: “We’re not chasing benchmarks. We’re chasing true reasoning.”

By highlighting AI reasoning limitations, Apple is positioning itself as the pragmatic thinker in a noisy, hype-filled AI market.

Real-World Examples of AI Reasoning Limitations

Example 1: Logical Puzzle Fail

Prompt: “If A is taller than B, and B is taller than C, who is the shortest?”

Output: “A is the shortest.” ❌

Even with multiple clarifications, models consistently got this wrong.
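
A puzzle like this can be checked with a few lines of ordinary code, which is why it makes a handy sanity test. The sketch below assumes the facts are given as (taller, shorter) pairs and are consistent; `shortest` and `answer_is_consistent` are hypothetical names used only for illustration.

```python
from typing import List, Set, Tuple


def shortest(taller_than: List[Tuple[str, str]]) -> str:
    """Given consistent (taller, shorter) facts, return the unique shortest person."""
    people: Set[str] = set()
    taller: Set[str] = set()
    for a, b in taller_than:
        people.update((a, b))
        taller.add(a)
    # The shortest person is the only one never stated to be taller than anyone.
    candidates = people - taller
    if len(candidates) != 1:
        raise ValueError("facts do not determine a unique shortest person")
    return candidates.pop()


def answer_is_consistent(taller_than: List[Tuple[str, str]], model_answer: str) -> bool:
    """Compare a model's answer against the symbolic result."""
    return model_answer.strip().upper() == shortest(taller_than).upper()


if __name__ == "__main__":
    facts = [("A", "B"), ("B", "C")]
    print(shortest(facts))                   # C
    print(answer_is_consistent(facts, "A"))  # False
```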

Example 2: Negation Confusion

Prompt: “Which of the following is NOT true?”

Output: A true statement labeled as the answer. ❌

This shows a failure to process negation, a core reasoning challenge that LLMs still struggle with.

Industry Response — Is Apple Just Throwing Shade?

Some developers and AI researchers pushed back, suggesting:

- Apple hasn’t released a competitive LLM yet

- The tests may be “overly hard” or cherry-picked

- Modern prompting or fine-tuning could improve results

But others agree it’s time to stop pretending LLMs are general reasoners. As Gary Marcus tweeted:

“We’re calling them ‘reasoning machines’ when they’re basically auto-completers with attitude.”

What This Means for You as an AI User

If you’re building or using AI tools, you need to:

✅ Be cautious with critical decisions: Don’t use unverified LLM outputs for health, finance, or law.

✅ Use layered systems: Combine AI with logic checkers, human oversight, or symbolic reasoning.

✅ Know the tool’s limit: Great for summarization, brainstorming, or automation — not for unassisted reasoning under ambiguity.

Understanding AI reasoning limitations makes you a more responsible and effective user or builder.
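
Here is a minimal sketch of what a layered system can look like: the model's answer passes through a list of checks, and anything that fails is routed to a human instead of being used directly. Everything here is an assumption for illustration, including the `Decision` type, `layered_answer`, and the `fake_model` stand-in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Decision:
    answer: str
    accepted: bool
    reason: str


def layered_answer(
    question: str,
    ask_model: Callable[[str], str],
    checks: List[Callable[[str, str], bool]],
) -> Decision:
    """Ask the model, then accept its answer only if every check passes."""
    answer = ask_model(question)
    for check in checks:
        if not check(question, answer):
            return Decision(answer, False, f"failed {check.__name__}; route to human review")
    return Decision(answer, True, "passed all checks")


# Hypothetical stand-in for a real LLM call; it always gives the wrong answer.
def fake_model(question: str) -> str:
    return "A"


def names_a_known_person(question: str, answer: str) -> bool:
    return answer in {"A", "B", "C"}


def matches_symbolic_result(question: str, answer: str) -> bool:
    # Assumed ground truth for "A taller than B, B taller than C".
    return answer == "C"


if __name__ == "__main__":
    q = "If A is taller than B, and B is taller than C, who is the shortest?"
    print(layered_answer(q, fake_model, [names_a_known_person, matches_symbolic_result]))
```

The design choice is that the model never gets the last word: a cheap deterministic check or a person does.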

How to Design Around AI Reasoning Limitations

Here’s what top builders are doing:

- Prompt engineering to limit ambiguity

- Rule-based logic layers for validation

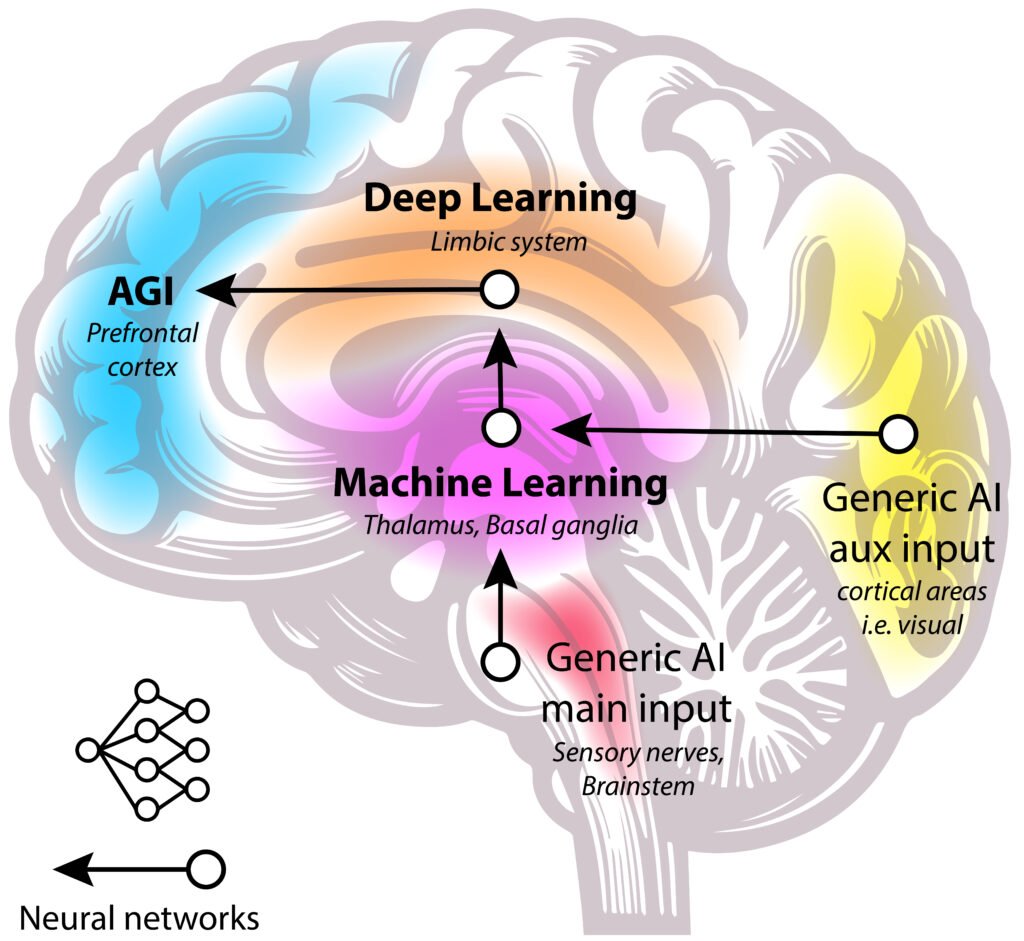

- Hybrid architectures that mix neural + symbolic systems

- Fine-tuning with diverse reasoning datasets (e.g., BigBench-Hard)

This is especially crucial for AI agents, copilots, and autonomous assistants.
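
As one small example of the hybrid idea, arithmetic can be handed to a deterministic evaluator rather than trusted to the model. The sketch below uses Python's standard `ast` module to evaluate only basic arithmetic; `safe_eval` and `model_agrees` are hypothetical helpers, and the supported operators are a deliberately narrow assumption.

```python
import ast
import operator

# Only these binary operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def safe_eval(expr: str) -> float:
    """Evaluate a plain arithmetic expression without running arbitrary code."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")

    return walk(ast.parse(expr, mode="eval"))


def model_agrees(expr: str, model_answer: str, tol: float = 1e-9) -> bool:
    """True if the model's numeric answer matches the symbolic result."""
    return abs(safe_eval(expr) - float(model_answer)) <= tol


if __name__ == "__main__":
    print(safe_eval("2 * (3 + 4)"))           # 14
    print(model_agrees("2 * (3 + 4)", "15"))  # False
```

In an agent or copilot, a disagreement between the two would be a signal to retry or escalate rather than ship the model's number.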

Link to Full Apple Paper

👉 Read Apple’s research: The Illusion of Thinking

AI Reasoning Limitations Will Shape the Future of Trustworthy AI

This moment marks a critical shift in the AI narrative. Apple’s work is less about criticism and more about calling for a new direction.

Instead of building AIs that “feel smart,” the future will favor systems that:

- Think reliably

- Admit uncertainty

- Deconstruct problems logically

And that’s how we’ll move from autocomplete engines to true artificial intelligence.

Final Thoughts — What’s Next?

Apple’s research is a wake-up call. And whether you’re building AI agents, using GPT-4, or just automating your workflow — you now know what to look for.

The next frontier in AI isn’t more data. It’s better reasoning.

Let’s build for that.

